Tips for software development

Medical device manufacturers often struggle to create adequate software documentation. Possible causes are that the development departments

- were newly formed and/or the employees have no experience with medical device regulation,

- only complicate the development processes and documents instead of specifically eliminating the cause.

Background to regulation

Do the authorities use norms, standards and regulations to annoy development departments with unnecessary documentation, or even to shut them down and thus slow down new developments and innovation? No. The purpose of the regulations is to enable manufacturers to work in a structured manner and to ensure that the development results are

- Secure (in the sense of security and safety) and

- Maintainable (the software is not only dependent on a few 'key' employees).

How can the documentation be structured as effectively as possible - but still be compliant?

This question is the focus of this blog post. The aim is to present a documentation strategy that meets the regulatory requirements in audits without burdening or even hindering the development departments with unnecessary effort.

In addition, the article offers concrete suggestions on how to set the right course right from the start of a new development - with the aim of minimizing subsequent costs and rework.

FDA guidance documents exist for the USA. Although these are formally non-binding recommendations, they reflect the expectations of the authority. In practice, compliance is strongly recommended unless equivalent alternatives are justified and documented in detail.

- Good documentation practice (GDP)

- Objective Evidence

- High Level Architecture

- System Requirements

- Traceability

- Traceability top vs. bottom

- Traceability right vs. left (V-model)

- Traceability SW Release vs. documentation

- Traceability to hardware versions

- Impact analysis

- System Architecture

- SW Architecture

- Suitable SW Architectures - Vertical Architecture

- Segregation

- Detailed Design

- Unit Tests

- SOUP vs. OTS Evaluation

Good documentation practice (GDP)

The American regulatory authority FDA places a very high value on GDP. Findings are raised quickly as soon as inconsistencies or differing styles are discovered.

What is GDP?

GDP means that documents are created and managed according to standardized criteria. Manufacturers often have problems with the following criteria:

Date format

In German-speaking countries, dates are typically written in the German format rather than the American one, i.e. DD.MM.YYYY instead of MM/DD/YYYY. In some cases, the year is given with only two digits, while automatically generated reports carry yet another timestamp format. This quickly leads to confusion about when a specification or report was signed: 12.10.11 could be read as 12 October 2011, 10 December 2011 or 11 October 2012. The auditor / reviewer can then no longer determine the order in which the specification and the reports were created.

Note

Against the background of internationalization and approval in various countries, it is advisable to use the ISO 8601 standard throughout the company, which defines the date format YYYY-MM-DD.
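The ambiguity can be made concrete with a short sketch. It parses the string 12.10.11 under three assumed conventions and prints each result as an ISO 8601 calendar date (YYYY-MM-DD). The helper name `to_iso` is only an illustration and not part of any company tooling.

```python
from datetime import date, datetime


def to_iso(d: date) -> str:
    """Render a date as an ISO 8601 calendar date (YYYY-MM-DD)."""
    return d.isoformat()


# The same string yields three different dates, depending on which
# convention the reader assumes.
ambiguous = "12.10.11"
as_german = datetime.strptime(ambiguous, "%d.%m.%y").date()      # DD.MM.YY
as_american = datetime.strptime(ambiguous, "%m.%d.%y").date()    # MM.DD.YY
as_year_first = datetime.strptime(ambiguous, "%y.%m.%d").date()  # YY.MM.DD

print(to_iso(as_german))      # 2011-10-12
print(to_iso(as_american))    # 2011-12-10
print(to_iso(as_year_first))  # 2012-10-11
```

Written as 2011-10-12, the date can only be read one way, and ISO dates also sort chronologically as plain text.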

Empty fields / Empty cells

Non-relevant fields within tables must be marked as not relevant. An auditor requires a justification as to why these fields are not relevant. An 'N/A - [reason]' is therefore recommended.
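If documents are generated or checked by tools, such a rule can be verified automatically. The following is a minimal sketch under the assumption that table cells are available as plain strings; the function name `check_cell` and the three result labels are hypothetical.

```python
import re

# "N/A" followed by a hyphen and at least one non-blank character of reason.
NA_WITH_REASON = re.compile(r"^N/A\s*-\s*\S.*$", re.IGNORECASE)


def check_cell(value: str) -> str:
    """Classify a table cell as 'ok', 'empty' or 'na_without_reason'."""
    text = value.strip()
    if not text:
        return "empty"
    if text.upper().startswith("N/A") and not NA_WITH_REASON.match(text):
        return "na_without_reason"
    return "ok"


for cell in ["", "N/A", "N/A - device has no battery", "42 mA"]:
    print(repr(cell), "->", check_cell(cell))
```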

Employee abbreviation

In medium-sized and larger companies, abbreviations are often introduced to identify employees in documents, systems or processes clearly, briefly and efficiently. It usually consists of a combination of letters (e.g. initials of the first name and surname) and sometimes numbers or other codes. It must be ensured in the company process and ideally through validated software tools that abbreviations are unique. This means that employees with the same initials do not use the same abbreviations. This also applies to former employees - abbreviations must NOT be reused!
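How a tool could enforce this is sketched below. The class `AbbreviationRegistry` and its numbering scheme (initials plus a running number) are assumptions for illustration only; the key point is that abbreviations of former employees stay reserved.

```python
class AbbreviationRegistry:
    """Issues employee abbreviations and never hands one out twice."""

    def __init__(self) -> None:
        # Every abbreviation ever issued, mapped to its owner. Entries are
        # never deleted, so former employees keep their abbreviation.
        self._issued: dict[str, str] = {}
        self._active: set[str] = set()

    def assign(self, first_name: str, surname: str) -> str:
        base = (first_name[0] + surname[0]).upper()
        candidate, number = base, 1
        while candidate in self._issued:
            number += 1
            candidate = f"{base}{number}"
        self._issued[candidate] = f"{first_name} {surname}"
        self._active.add(candidate)
        return candidate

    def deactivate(self, abbreviation: str) -> None:
        # The employee leaves: mark as inactive, but keep the reservation.
        self._active.discard(abbreviation)

    def owner(self, abbreviation: str) -> str:
        return self._issued[abbreviation]


registry = AbbreviationRegistry()
first = registry.assign("Anna", "Meier")    # AM
second = registry.assign("Axel", "Mueller")  # AM2
registry.deactivate(first)                   # Anna Meier leaves the company
third = registry.assign("Anja", "Maier")    # AM3, not AM
print(first, second, third)
```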

Abbreviations

Every company uses abbreviations to avoid longer terms and speed up the flow of information. These abbreviations are often neither obvious nor unambiguous to new employees, outsiders and sometimes even colleagues within the company. A company-wide glossary containing all abbreviations is therefore a good idea.

Objective Evidence

Behind the somewhat unwieldy term 'objective evidence' is the requirement to prove that

- the individual tests were actually carried out, and

- the test object (SUT / DUT = Software / Device under Test) is clearly identifiable.

What needs to be documented for this?

Test object:

It should be clear which is the SUT / DUT (Software / Device under Test). Ideally, a photo / snapshot of the software version displayed in the user interface (UI) or transmitted via the data interface is taken and documented. This can be the initial test case or always the first action before the actual test.

With unit tests, it is much more difficult to document the software version / revision used - possibly via the SVN properties or documentation of the log of the checkout.

Documentation of tests

For individual tests, the question often arises as to what exactly should be documented and whether a recording or similar is necessary at all. For overall system tests with performance requirements, it makes sense to record a video (e.g. closing of an electrical clamp due to bubble detection in the hose). Video recordings are rarely necessary for unit tests or software tests. Nevertheless, the proof must be designed as if the test results were disclosed externally - with the aim of ruling out manipulation. Example: When testing a specific, flashing alarm, a photo is sufficient to show that the alarm was actually displayed. An automatic mechanism that takes a snapshot after each test step can also be built in.
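For automated tests, the evidence can be captured as a structured record. The sketch below is one possible shape, not a prescribed format: the field names, the test ID `TC-017` and the version string are invented for illustration. It stores the reported software version together with a SHA-256 hash of the tested binary, so that the test object can be identified unambiguously later.

```python
import hashlib
import json
from datetime import datetime, timezone


def evidence_record(test_id, sut_version, artifact: bytes, result, tester):
    """Build one evidence entry for a single executed test."""
    return {
        "test_id": test_id,
        # Version as reported by the software under test (UI or interface).
        "sut_version": sut_version,
        # The hash ties the result to exactly one binary.
        "artifact_sha256": hashlib.sha256(artifact).hexdigest(),
        "result": result,
        "tester": tester,
        # ISO 8601 timestamp in UTC avoids ambiguous date formats.
        "executed_at": datetime.now(timezone.utc).isoformat(timespec="seconds"),
    }


# Placeholder bytes; in practice the content of the released binary file.
firmware = b"example firmware image"
record = evidence_record("TC-017", "2.4.1", firmware, "pass", "AM2")
print(json.dumps(record, indent=2))
```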

You can find detailed information here:

- FDA Guidance Document: "Content of Premarket Submissions for Device Software Functions"

- IEC 62304:2006+A1:2015 "Medical device software. Software life-cycle processes"

- FDA Guidance: "General Principles of Software Validation"

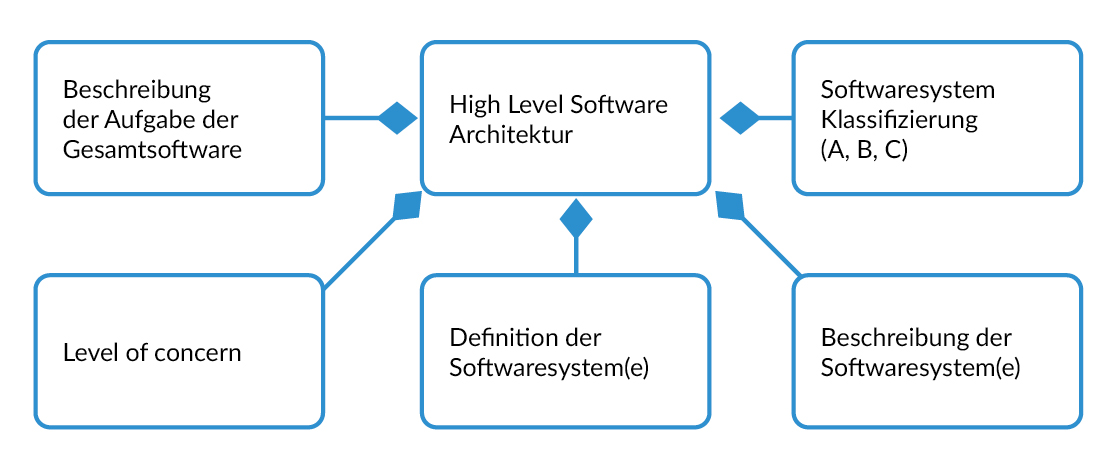

High level architecture

To ensure that software developers, auditors, authorities and other departments (quality management, regulatory affairs) quickly understand the software, it is advisable to create a high-level architecture document containing the following points:

- Overview of the various SW systems

- A brief description (at high-level) of the entire software and the individual software systems

- Software classification according to IEC 62304 (class A, B, C) of each software system.

- Level of concern according to FDA Guidance 'Content of Premarket Submissions for Device Software Functions'

Note

- The evaluation of the SW class and level of concern can also take place in this document.

- It is advisable to define one SW system for each microcontroller. This makes it easier to prove segregation in accordance with IEC 62304 Chapter 5.3.5 '...that such segregation is effective'. In the case of stand-alone software, it is often necessary to define a single software system.

Ideally, the high-level architecture document is created by the system architects before the start of software development and formally corresponds to a system architecture document.

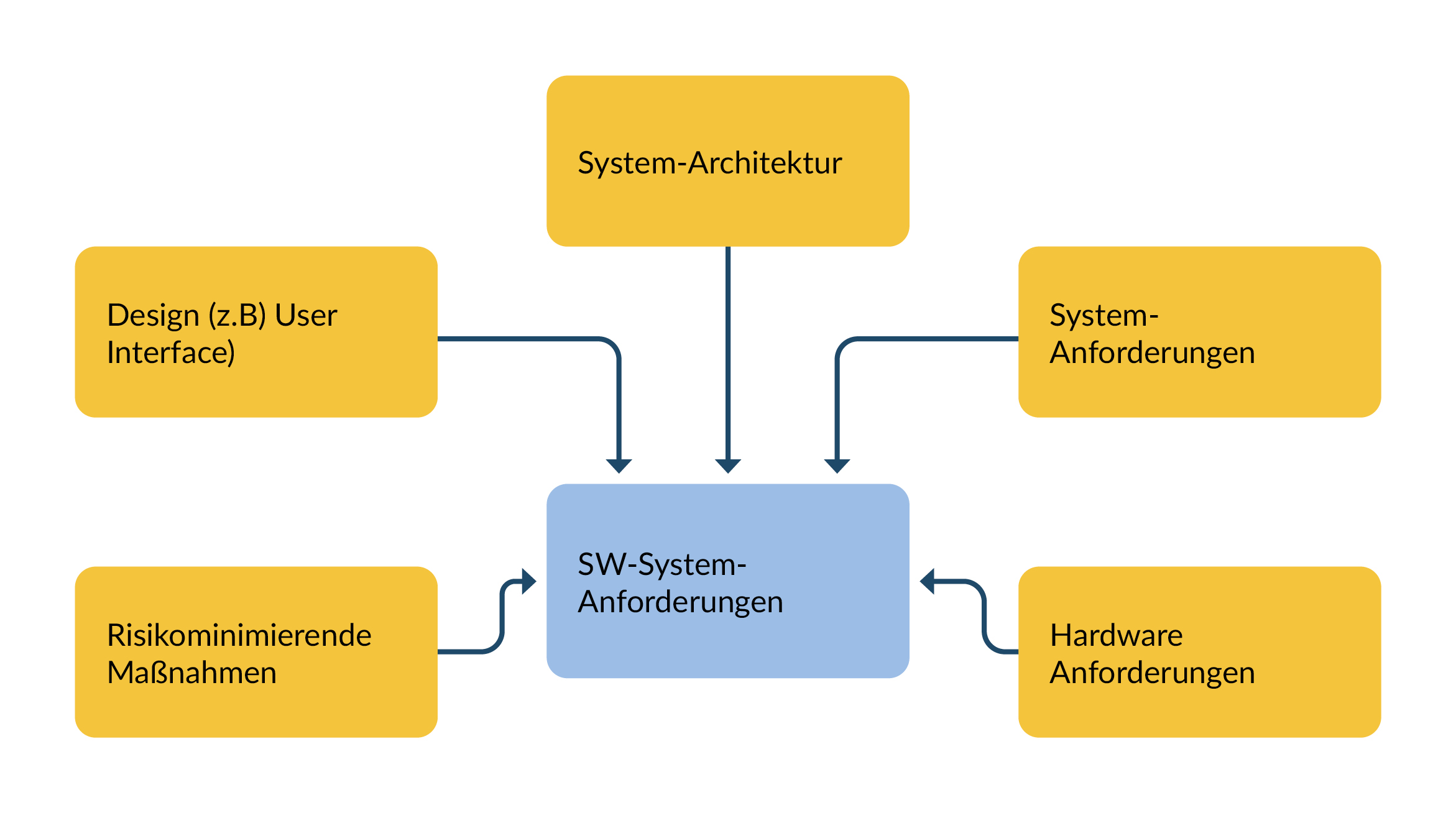

System requirements

This section is dedicated to the input for the software system requirements. The background to this is that the involvement of stakeholders and the creation of specifications are sometimes 'forgotten' and the software requirements are therefore incomplete or invalid.

The collected requirements for a software system are derived from

- System requirements,

- System architecture,

- User interface (UI specification) and

- Electronics

When creating system requirements, the requirements for

- Debug interfaces

- Program logbooks (e.g. for saving crashes incl. trace)

are regularly needed.

Figure 1 Sources of SW system requirements

In addition, the electronics group should ideally develop together with the software group, and the development results must be documented. Relevant items include:

- Power up and down mechanism

- Properties and parameters of controllers

These results should be incorporated into architecture and design requirements, from which software requirements can be derived.

It is advisable to document a justification for all parameters as well as the trade-offs, i.e. the disadvantages that were knowingly accepted (e.g. overshoot behavior of controllers or dead times). These justifications are important for future generations of developers and for the maintenance of medical devices.

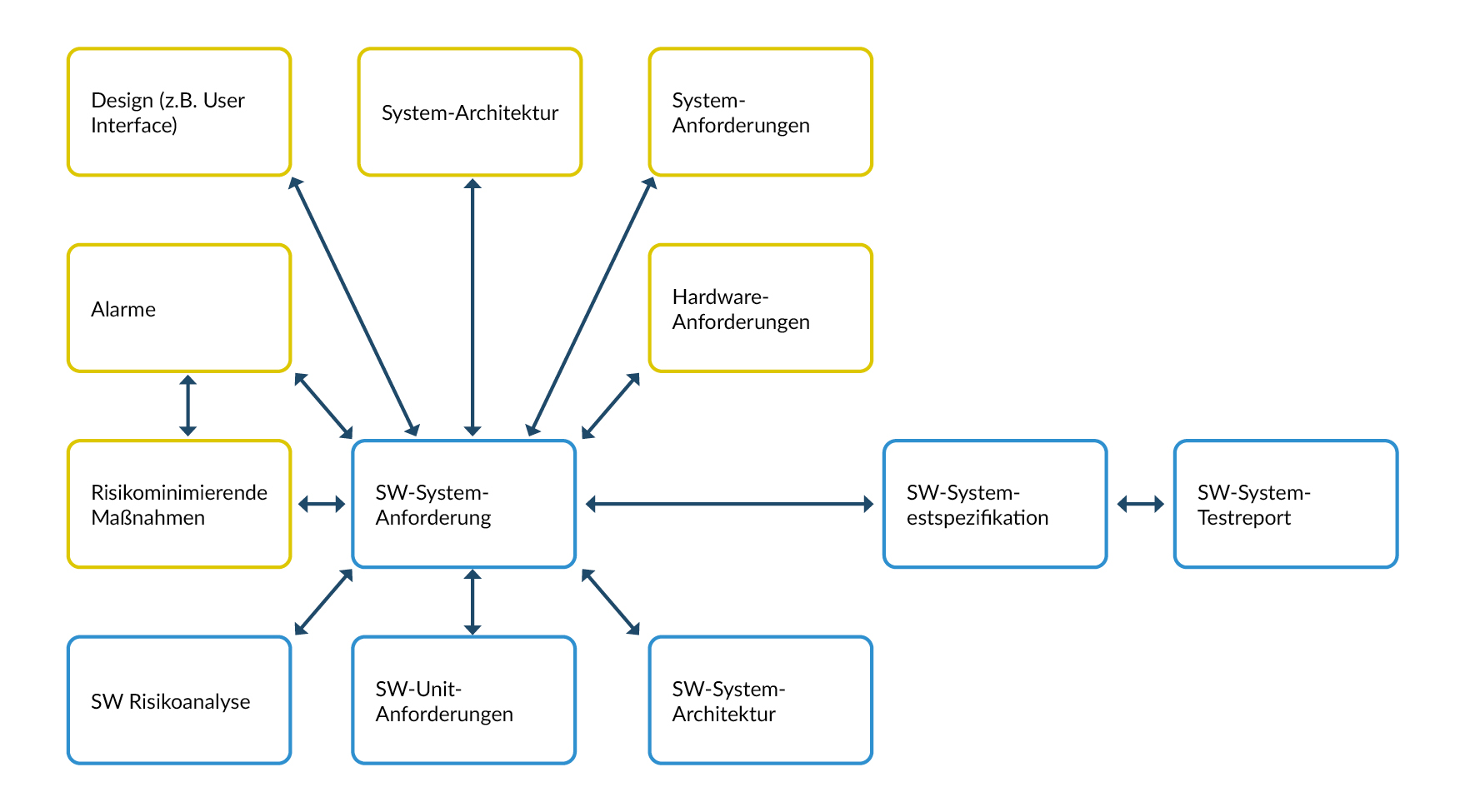

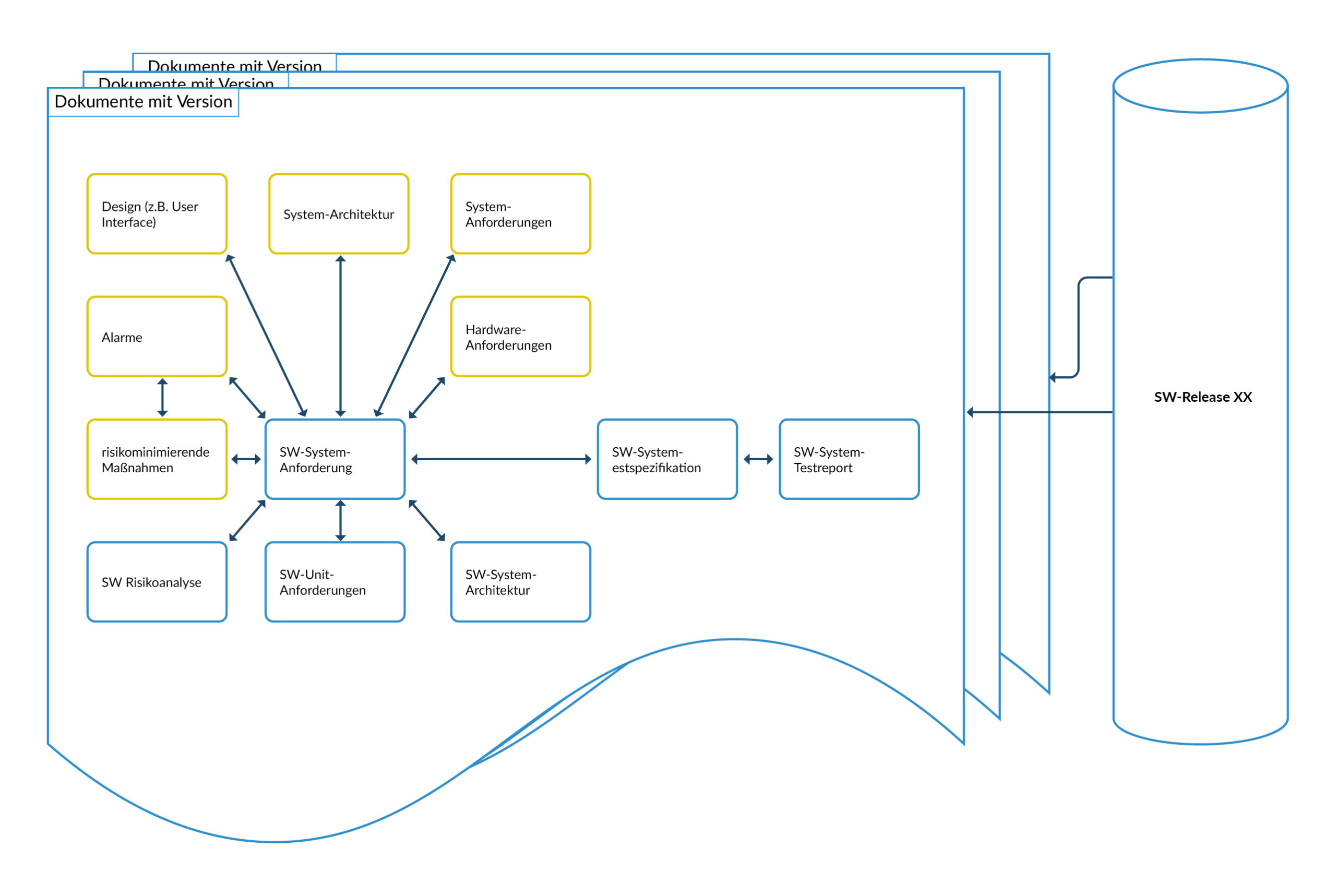

Traceability

The term "traceability" refers to the systematic documentation of the relationships between the individual development results - with the aim of being able to clearly trace each output back to the corresponding input, and vice versa.

Figure 2 Possible relationships at SW system level

Figure 3 Traceability SW document versions to SW releases

Traceability top vs. bottom

The requirement is that

- the requirements should be derived from higher-level requirements (example: software requirements must be derived from system requirements, architecture and specifications).

- high-level requirements refer to SW requirements

Traceability right vs. left (V-model)

The relationships between requirements, test cases and test results are as follows:

- Test cases must be derived from requirements.

- Test results must originate from test cases.

- Requirements must refer to test cases.
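These relationships can be checked mechanically once the links are available as data. The sketch below assumes a simple export from a requirements tool; the IDs (`SRS-1`, `TC-1`, ...) and the function name `trace_gaps` are hypothetical.

```python
# Requirement IDs, the requirements each test case is derived from,
# and the results recorded per test case.
requirements = {"SRS-1", "SRS-2", "SRS-3"}
test_cases = {"TC-1": {"SRS-1"}, "TC-2": {"SRS-1", "SRS-2"}, "TC-3": set()}
test_results = {"TC-1": "pass", "TC-2": "fail"}


def trace_gaps(requirements, test_cases, test_results):
    """Report every broken link between requirements, tests and results."""
    covered = set().union(*test_cases.values())
    return {
        "requirements_without_test": sorted(requirements - covered),
        "tests_without_requirement": sorted(
            tc for tc, reqs in test_cases.items() if not reqs
        ),
        "tests_without_result": sorted(set(test_cases) - set(test_results)),
        "results_without_test": sorted(set(test_results) - set(test_cases)),
    }


gaps = trace_gaps(requirements, test_cases, test_results)
for kind, items in gaps.items():
    print(f"{kind}: {items}")
```

In this example, SRS-3 has no test case, and TC-3 is neither derived from a requirement nor executed.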

Traceability SW Release vs. documentation

Software development is usually incremental, meaning that several software releases are created over the course of the project. The software is also developed further during operation. This means that multiple software versions are created during the life of the medical device. The same applies to the documentation, but not every document is adapted. It is therefore important to define which documents belong to which software release before development begins:

- It must be clear which documents and document versions belong to which software release.

- Test reports must be attributable to a software release or a version of the unit. Objective proof (evidence) must be provided in this case.
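One way to make this assignment explicit is a manifest per software release. The following sketch assumes letter-based document versions and uses invented document IDs (`SRS`, `SAD`, `SDD`); it also shows how to list the documents that changed between two releases.

```python
from dataclasses import dataclass, field


@dataclass
class ReleaseManifest:
    """Documents and test reports that belong to one software release."""
    software_release: str
    documents: dict                      # document ID -> document version
    test_reports: list = field(default_factory=list)


def documents_changed(old: ReleaseManifest, new: ReleaseManifest) -> dict:
    """Return {document ID: (old version, new version)} for changed documents."""
    return {
        doc: (old.documents.get(doc), version)
        for doc, version in new.documents.items()
        if old.documents.get(doc) != version
    }


v1_0 = ReleaseManifest("1.0.0", {"SRS": "A", "SAD": "A", "SDD": "A"}, ["TR-001"])
v1_1 = ReleaseManifest("1.1.0", {"SRS": "B", "SAD": "A", "SDD": "B"}, ["TR-002"])

# The architecture document (SAD) was not adapted and stays at version A.
print(documents_changed(v1_0, v1_1))
```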

Traceability to hardware versions:

Software development is much more volatile than electronics development. In the maintenance phase, however, electronic components are frequently replaced because parts are discontinued. The software will therefore encounter different hardware, even in the field. It is therefore important that

- the hardware and hardware version used can be recognized in test reports,

- an evaluation takes place of the impact of a software change on existing hardware and

- an evaluation takes place to determine the impact of a hardware change on existing software.

Impact Analysis:

Prior to the actual development work, an impact analysis must be carried out to evaluate the effects of the change. The effects on the following, among others, must be considered and documented:

- the existing requirements

- the existing test cases

- the SW units (hereinafter referred to as "units")

- the risk file

- the SW classification

- the security

- the instructions for use

- the service instructions / service manual

- the production

- the hardware

- the existing hardware versions

It is advisable to provide evidence of the implementation of these planned activities.
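A simple completeness check can support this. The sketch below mirrors the areas listed above as a checklist and reports every area that has no documented assessment yet; the names `IMPACT_AREAS` and `open_areas` and the example entries are assumptions for illustration.

```python
IMPACT_AREAS = (
    "requirements", "test cases", "SW units", "risk file",
    "SW classification", "security", "instructions for use",
    "service manual", "production", "hardware",
    "existing hardware versions",
)


def open_areas(assessment: dict) -> list:
    """Return the areas that have no documented assessment yet."""
    return [
        area for area in IMPACT_AREAS
        if not assessment.get(area, "").strip()
    ]


# An explicit "no impact" with a reason counts as an assessment;
# a missing or blank entry does not.
assessment = {
    "requirements": "SRS-12 changed",
    "test cases": "TC-40 and TC-41 must be updated",
    "risk file": "No impact - alarm limits unchanged",
    "security": "  ",
}
print(open_areas(assessment))
```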

Conclusion

Traceability and its aspects should be considered before the start of development and an initial rough concept should be drawn up, which is refined during the course of development. In the case of changes and bug fixes, it is essential to create an impact analysis before implementation.

System architecture

A system architecture is often focused on aspects such as the requirements of IEC 60601-1 (proof of functional safety, single-fault safety, essential performance, etc.). Unfortunately, the software aspects are often forgotten. A good system architecture for the software must therefore achieve the following:

- The division into Programmable Electrical Medical System (PEMS) and Programmable Electronic Subsystem (PESS). It is also helpful to declare a PESS as a software system. If there are several microcontrollers on a PESS, it is better to declare a software system for each microcontroller.

- A declaration of what constitutes a software system.

- Definition of the interfaces between software systems (incl. runtime behavior). Although software development specifies the interfaces, they are part of the system architecture.

- Definition of what a software system should do and for which (sub)tasks it is responsible (high level)

Software architecture

It is often unclear to the development department what is behind the software architecture required by IEC 62304.

In practice, this often results in extensive, difficult-to-maintain documents that do not even meet the minimum requirements of the standard.

We therefore want to provide a compact explanation of the architectural elements that a software architecture document must contain - with the aim of making the actual effort transparent and manageable. To ensure that the architecture does not miss its actual target, the following pitfalls should be avoided:

- The documents explain the interaction of classes instead of mapping a higher-level system structure.

- The documents contain process steps, although these are not part of the architecture.

- Colorful images that do not contain a standardized language (e.g. UML) or an explanation of the various colors, symbols and strokes.

- Non-readable images: the font is too small or the diagram is overfilled.

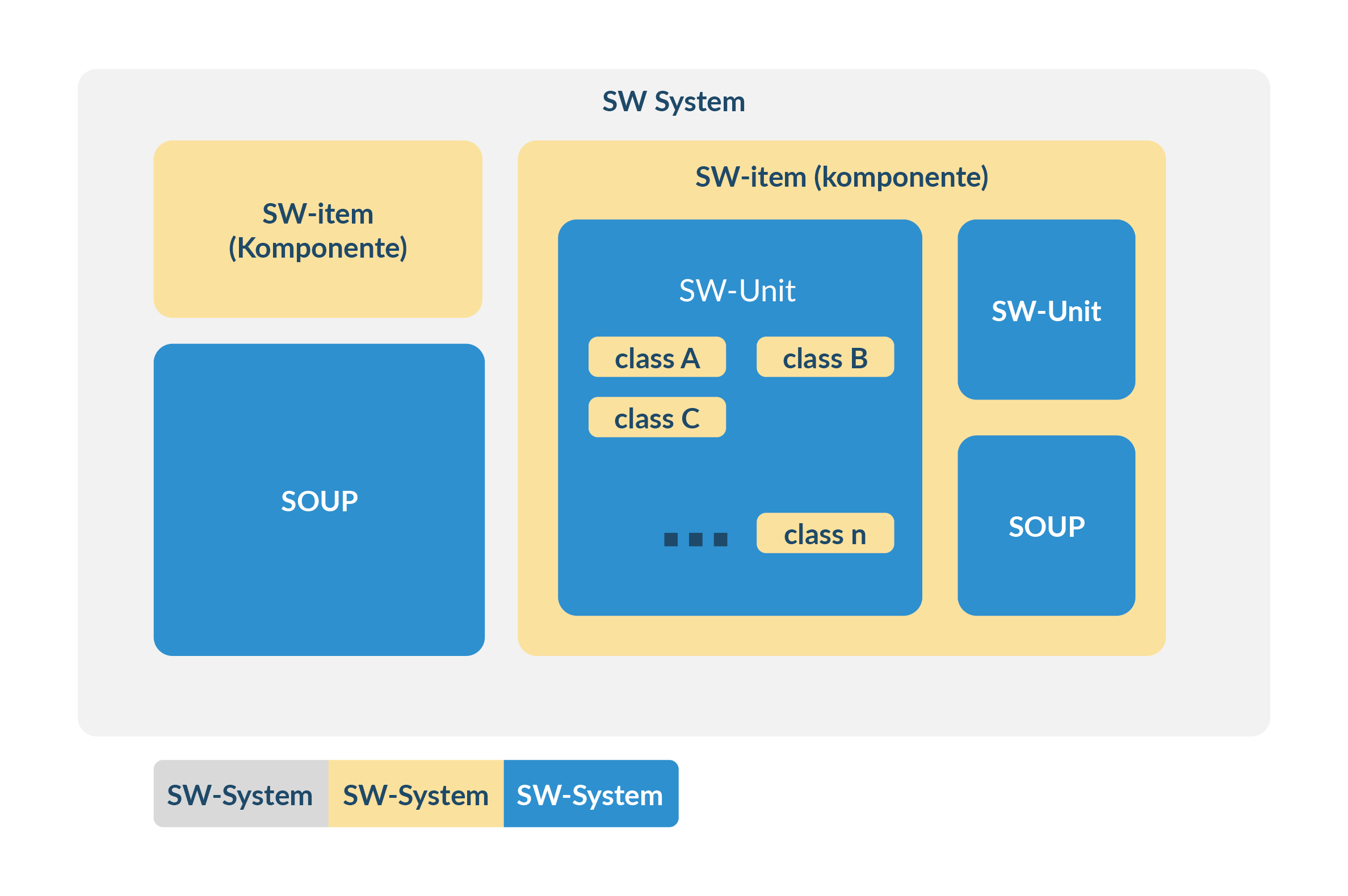

What makes good architecture?

- Correctly represents the components 'SW system', 'SW item' and 'SW unit' required by the IEC 62304 standard (using exactly these terms)

- Interfaces between SW systems, items and units are described (e.g. in a referenced document)

- Brief (high-level) explanation of what the task of the component is

- Design decisions should be available (ideally in a separate document). This also includes alternatives that were deliberately not implemented or rejected. A justification helps the developers in the maintenance phase or in the subsequent product.

- A formalized language can support readability and verifiability

- Each diagram contains a context, so that it is clear what it is about, and a legend if special syntax / colors are used

- Explanation for the use of design patterns, including reasons why this particular pattern was chosen and not others (this supports future developers in the maintenance phase)

- Thoughts on tracing the causes of faults - even during the operating phase of the medical device.

- Use of off-the-shelf software / SOUP (software of unknown provenance)

Note

Select the granularity of the units depending on the risk class of the software system. For more critical systems, it is recommended to break down the architecture to a finer level - i.e. to define more, but smaller units. Less critical systems can use coarser units. This saves documentation work, and the risk class makes it clear to the authority why savings were made there.

It should be noted that IEC 62304 requires verification of the architecture. A verification plan must therefore be defined BEFORE the first version of the architecture is released (ideally in the SW development process or SW development plan). A checklist is a possible variant for verifying the software architecture.

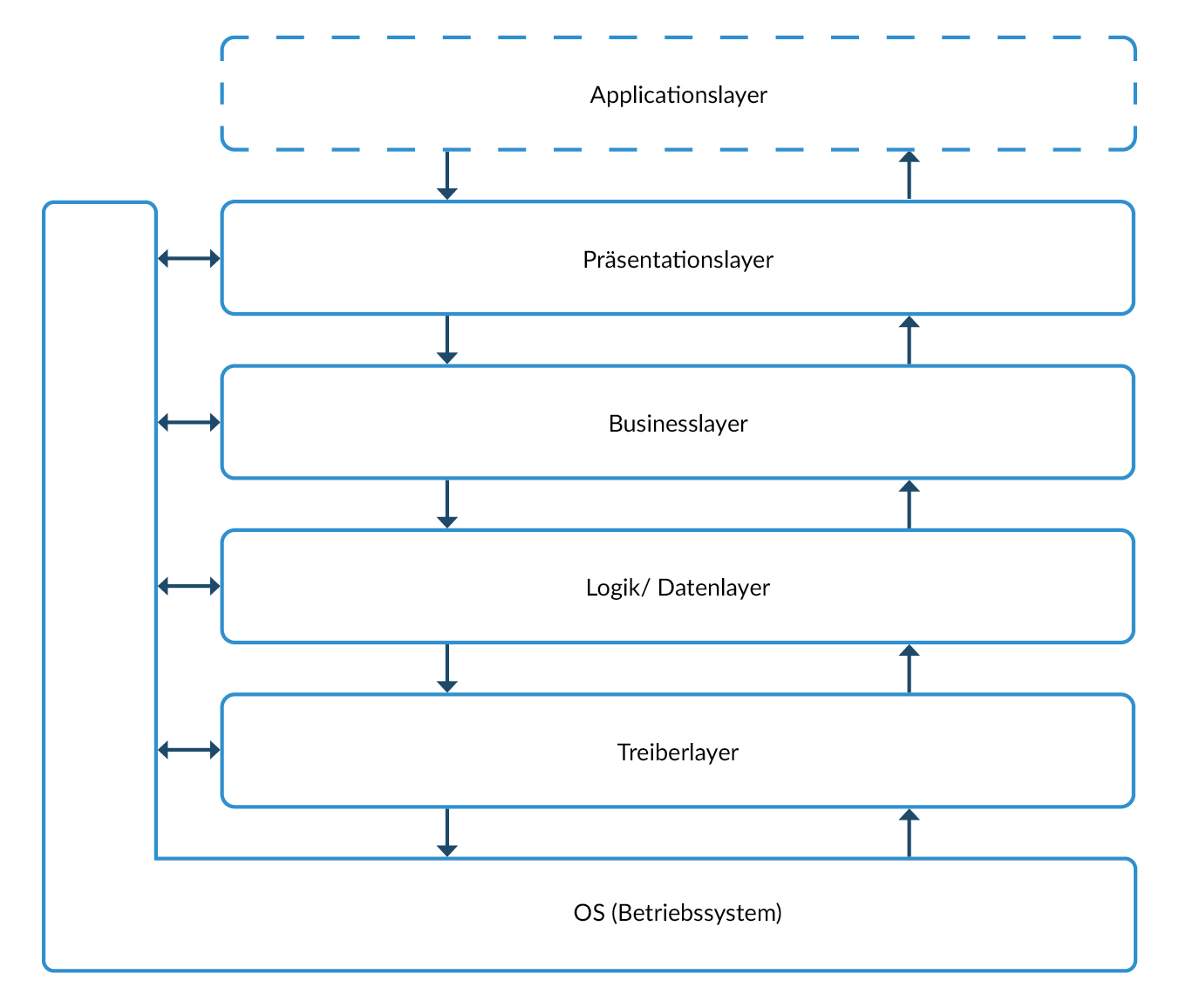

Suitable architectures

Software development is often based on a layered architecture. This type of architecture is characterized by very broad interfaces between the individual layers. In contrast, the individual functions within a layer are often only weakly connected. Creating units according to IEC 62304 is very complex here, as many interfaces have to be taken into account and many functions have to be implemented at the same time.

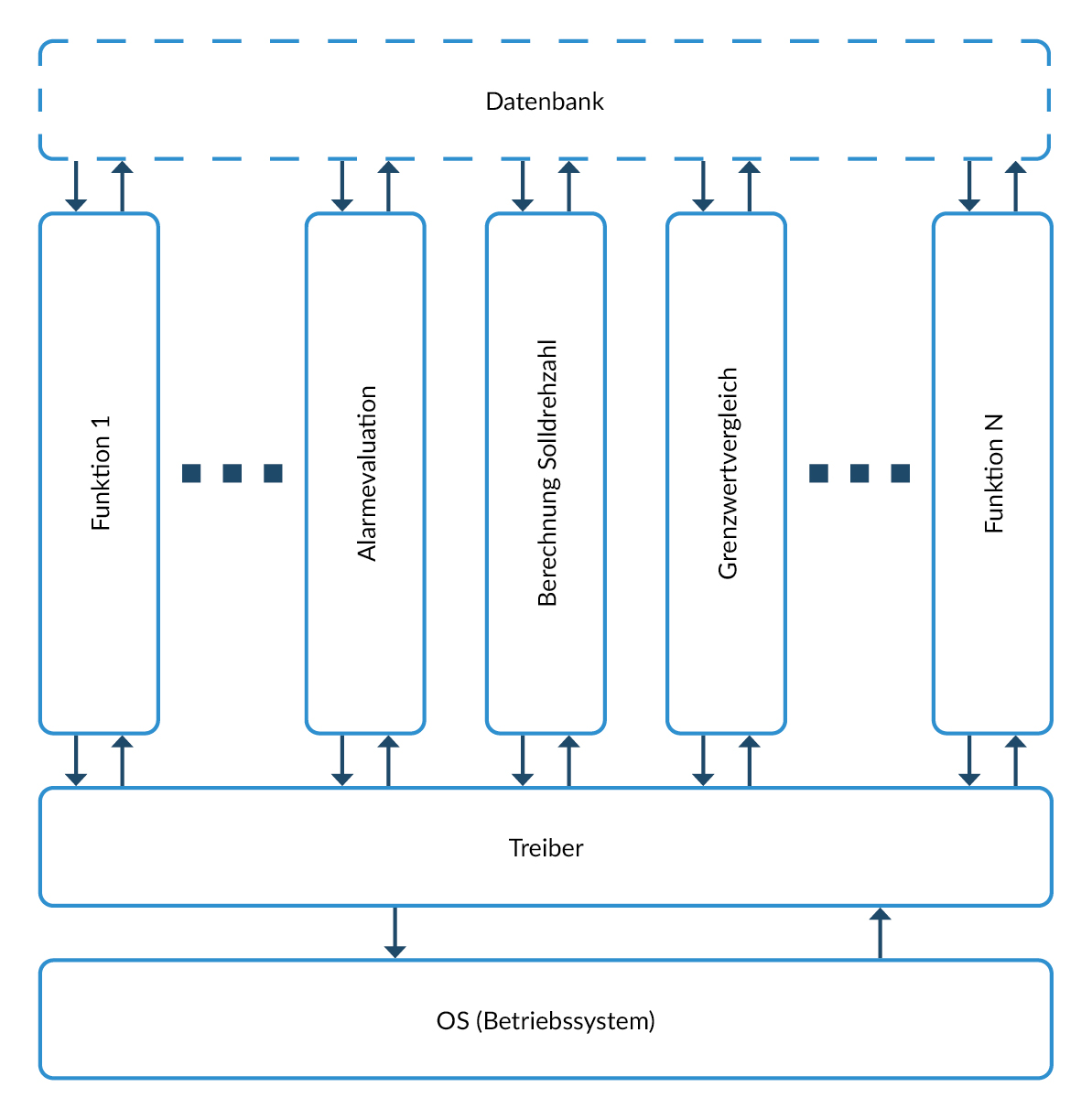

An alternative is a vertical architecture that forms complete functional units that run through all layers without major dependencies on other functional units.

In the context of IEC 62304, one or more coherent functional units of the vertical architecture form a unit, which offers the following advantages:

- Limited number of interfaces

- The function is already described in the higher-level specification. Only the interfaces need to be adapted. This means that unit tests already check a large part of the functions of the entire system, which enables errors to be detected at an early stage.

In addition

- functionality can easily be added at a later date - simplifying maintainability.

- Software integration tests (required by IEC 62304) are easily possible: several units are executed together.

Figure 4 Example of a layer model. The interfaces of individual layers are often very broad.

Figure 5 Example of a vertical software architecture

Segregation

IEC 62304 requires documented segregation for different software classes, i.e. a logical or functional separation of the corresponding software elements. This must be evaluated in the system architecture or an appropriate document.

However, it is much more difficult to classify components differently if they run on the same microcontroller / server / processor. A clean segregation must be established both in the argumentation and in the architecture. For example, it is not possible to assign a component (e.g. logging) to class A if a class B or C software component is running in parallel on the same microcontroller.

Why? Class A software is verified to a much lesser extent (not at all at unit level - except in the FDA context). An example: A pointer error in the logging component could overwrite memory areas of safety-critical class B/C components unnoticed - with potentially dangerous consequences.
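The effect can be illustrated with a small simulation. Python has no raw pointers, so the sketch below models shared RAM as a `bytearray`; the memory layout, the addresses and the parameter `DOSE_LIMIT_ADDR` are invented for illustration. The unchecked write behaves like a C `memcpy` with a wrong length.

```python
RAM_SIZE = 32
LOG_START, LOG_SIZE = 0, 16   # class A: logging buffer, bytes 0..15
DOSE_LIMIT_ADDR = 16          # class B/C: safety-relevant parameter

ram = bytearray(RAM_SIZE)
ram[DOSE_LIMIT_ADDR] = 50     # configured limit


def log_checked(message: bytes) -> None:
    """Write a log message, clipped to the logging buffer."""
    clipped = message[:LOG_SIZE]
    ram[LOG_START:LOG_START + len(clipped)] = clipped


def log_unchecked(message: bytes) -> None:
    """Bug: no bounds check, the write can run past the logging buffer."""
    ram[LOG_START:LOG_START + len(message)] = message


log_checked(b"A" * 20)
limit_after_checked = ram[DOSE_LIMIT_ADDR]    # unchanged

log_unchecked(b"A" * 20)
limit_after_unchecked = ram[DOSE_LIMIT_ADDR]  # overwritten with ord("A")

print(limit_after_checked, limit_after_unchecked)  # 50 65
```

Nothing in the logging component fails visibly; the damage only shows up later in the safety-relevant component.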

Is it still possible to place class A components in parallel with class B or C components in the software system?

In principle, this is possible if the segregation of one software from the other is ensured.

What are the options for segregation?

Spatial segregation:

This is achieved when components run on different microcontrollers and the memory areas are separated.

Alternatively, spatial segregation can also be realized by using operating systems on powerful processors that enable a clean separation of components.

Typical examples are

- Hypervisors (known from cloud and data center environments)

- Container technologies

- Virtualization solutions

Note

Spatial segregation is ideal for minimizing the risk of cybersecurity vulnerabilities.

Temporal segregation:

With this method, the class A function only runs at times when the application itself is not running, for example before the application is started (bootloader) or after it has ended. This is only valid if it can be ruled out that the application and the class A function interact in terms of data.

Detailed design

Chapter 5.4 of IEC 62304 requires a detailed design for Class C software units. If approval is sought in the USA, the detailed design must be created in such a way that no ad-hoc decisions are made by the developer:

"A complete software design specification will relieve the programmer from the need to make ad hoc design decisions"

(FDA Guidance, General Principles of Software Validation, Chapter 5.2.3).

Furthermore, the FDA requires that there must be traceability between the unit tests and the detailed design. (FDA Guidance, General Principles of Software Validation, Chapter 5.2.5)

Recommendations

- The detailed design should be written as a requirement.

- The detailed design is described via the unit's interfaces.

- Use of a standardized language to describe, among other things, runtime behavior (e.g. UML).

- Unit tests cover the requirements of the detailed design.
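The link between unit tests and the detailed design can be kept directly in the test code. The sketch below uses a hypothetical `covers` decorator and invented design IDs (`DD-CLAMP-1`, `DD-CLAMP-2`); it is one possible convention, not a requirement of the standard or the guidance.

```python
import unittest


def covers(*design_ids):
    """Attach detailed-design IDs to a test method."""
    def decorate(func):
        func.design_ids = design_ids
        return func
    return decorate


def clamp(value, low, high):
    """Unit under test.

    DD-CLAMP-1: the result is limited to the range [low, high].
    DD-CLAMP-2: low > high is rejected with a ValueError.
    """
    if low > high:
        raise ValueError("low must not exceed high")
    return max(low, min(value, high))


class ClampTests(unittest.TestCase):
    @covers("DD-CLAMP-1")
    def test_limits_value(self):
        self.assertEqual(clamp(12, 0, 10), 10)
        self.assertEqual(clamp(-3, 0, 10), 0)
        self.assertEqual(clamp(5, 0, 10), 5)

    @covers("DD-CLAMP-2")
    def test_rejects_inverted_range(self):
        with self.assertRaises(ValueError):
            clamp(1, 10, 0)


def trace_matrix(test_class):
    """Build {design ID: [test names]} from the decorated test methods."""
    matrix = {}
    for name in unittest.TestLoader().getTestCaseNames(test_class):
        method = getattr(test_class, name)
        for design_id in getattr(method, "design_ids", ()):
            matrix.setdefault(design_id, []).append(name)
    return matrix


print(trace_matrix(ClampTests))
```

The resulting matrix can be exported with the test report, so that the traceability is regenerated with every test run instead of being maintained by hand.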

Note

As soon as further requirements, internal logic (e.g. state machines) or components are specified within a unit, the unit is considered open in accordance with IEC 62304 and becomes an item (component). In this case, complete documentation of all subordinate units is required.

Figure 6 Principle of the architecture

Unit tests

Traceability

Like all software tests, unit tests should be carried out automatically. If the US market is potentially the target country of the medical device, the somewhat older FDA guidance document 'General Principles of Software Validation' in chapter 5.2.5 states that traceability must be provided between the detailed design and the unit test cases:

- Test Planning

- Structural Test Case Identification

- Functional Test Case Identification

- Traceability Analysis - Testing

- Unit (Module) Tests to Detailed Design

- Integration Tests to High Level Design

- System Tests to Software Requirements

- Unit (Module) Test Execution

- Integration Test Execution

- Functional Test Execution

- System Test Execution

- Acceptance Test Execution

- Test Results Evaluation

- Error Evaluation/Resolution

- Final Test Report

Excerpt from FDA Guidance Document 'General Principles of Software Validation' Chapter 5.2.5

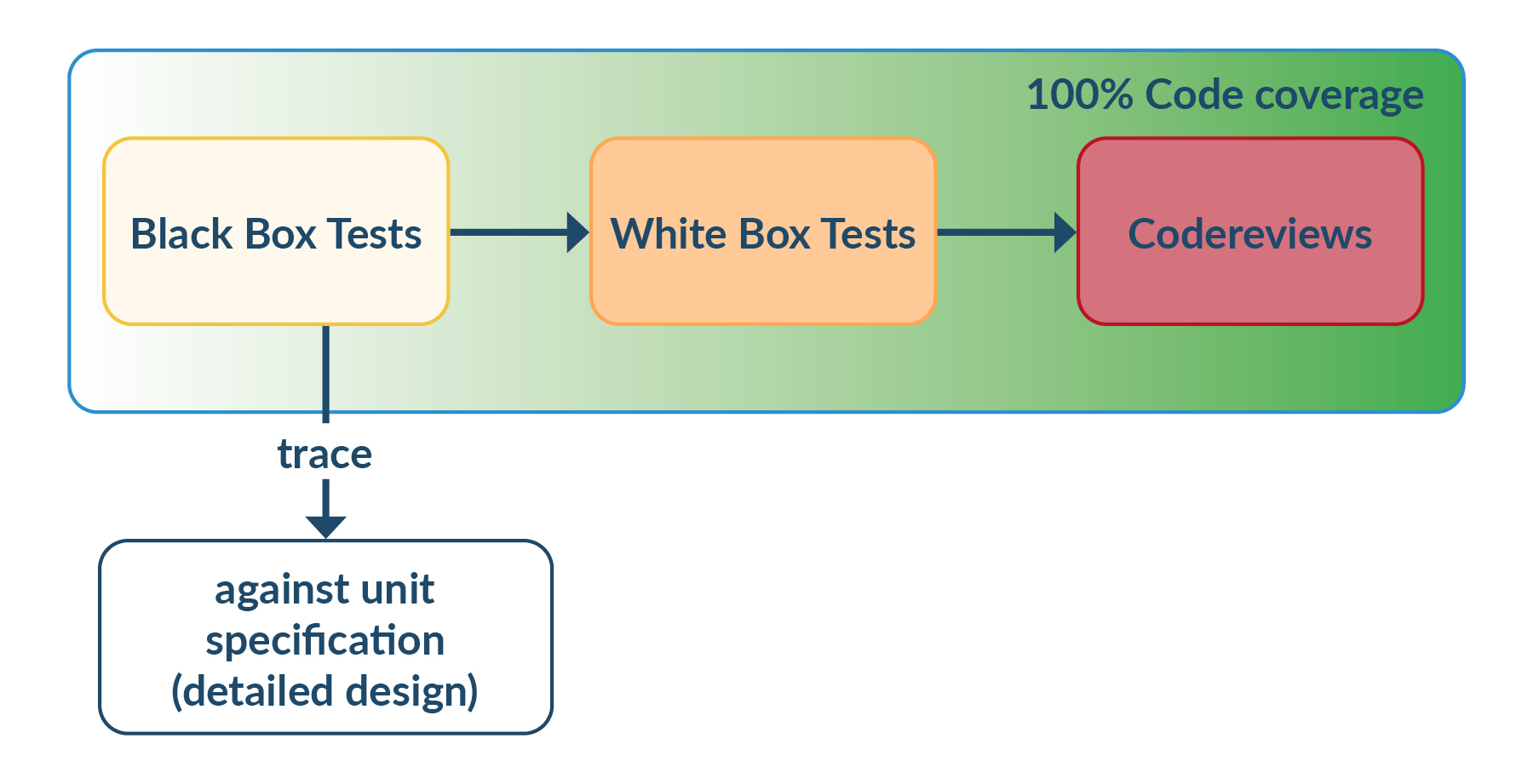

Test coverage (code coverage)

Furthermore, unit tests must achieve 100% test coverage:

"Use of the term 'coverage' usually means 100% coverage."

[FDA Guidance Document 'General Principles of Software Validation', Chapter 5.2.5].

With defensive programming, 100% test coverage cannot be achieved. Should defensive programming therefore be avoided? Does the source code have to be modified so that 100% test coverage is possible? Or should it take months to achieve the final 10-20% test coverage?

No! That is not the solution.

With a normal test development effort, a reasonable test coverage can be achieved for unit tests (approx. 80% is realistic, drivers are an exception). Black-box tests of the unit should be used wherever possible.

The source code should then be opened and the coverage further increased by means of whitebox tests. Of course, these whitebox tests cannot be derived from requirements - but they are very valuable as regression tests for future test executions. Until 100% test coverage has been achieved, code reviews must be defined in the software development plan and, of course, carried out.

Figure 8 Test procedure to achieve 100% code coverage.
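The difference between the two test stages can be shown with a small example. In the sketch below, all names (`Mode`, `pump_rate_for`, `_pump_rate`) are invented; the defensive branch cannot be reached through the public interface, so only a whitebox test that calls the internal function directly can cover it.

```python
from enum import Enum


class Mode(Enum):
    STANDBY = 0
    RUNNING = 1


def _pump_rate(mode):
    """Internal function with a defensive branch."""
    if mode is Mode.STANDBY:
        return 0
    if mode is Mode.RUNNING:
        return 100
    # Defensive branch: guards against corrupted state. It cannot be
    # reached through pump_rate_for(), which only creates valid modes.
    raise RuntimeError(f"unexpected mode: {mode!r}")


def pump_rate_for(command: str) -> int:
    """Public interface of the unit."""
    mode = Mode.RUNNING if command == "start" else Mode.STANDBY
    return _pump_rate(mode)


def blackbox_tests_pass() -> bool:
    """Derived from requirements; uses only the public interface."""
    return pump_rate_for("start") == 100 and pump_rate_for("stop") == 0


def whitebox_test_passes() -> bool:
    """Not derivable from requirements; injects an invalid internal state."""
    try:
        _pump_rate("corrupted")
    except RuntimeError:
        return True
    return False


print(blackbox_tests_pass(), whitebox_test_passes())  # True True
```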

Another approach is the risk-based approach in order to test critical units more deeply and broadly. The minimum test coverage required for each risk must be defined in advance.

Note

Since in modern software development the source code is subject to a code review during development anyway (for example via Git merge requests), it can always be argued that a rudimentary review has already taken place. Inclusion in the process landscape (e.g. software development plan) is mandatory.

Test coverage - types

The FDA guidance document General Principles of Software Validation (chapter 5.2.5) describes different types of test coverage. Three of the most important types of coverage are listed below:

Statement coverage:

The FDA guidance document specifically states that statement coverage is considered insufficient: 'its achievement is insufficient to provide confidence in a software product's behavior'.

Decision coverage:

Decision coverage is considered acceptable as a criterion for test coverage for most software products: 'It is considered to be a minimum level of coverage for most software products, but decision coverage alone is insufficient for high-integrity applications'.

Condition coverage:

For condition coverage, it is recommended to use MC/DC (modified condition/decision coverage).
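The difference between the coverage types can be shown on a decision with two conditions. The following sketch is purely illustrative: the function `limit` and the test sets are invented, and the MC/DC check implements the common "unique cause" interpretation, in which each condition must flip the outcome while all other conditions stay fixed.

```python
from itertools import combinations


def limit(value, maximum, enabled):
    """Example unit: one decision with two conditions, no else branch."""
    if enabled and value > maximum:
        value = maximum
    return value


def decision(enabled: bool, over: bool) -> bool:
    """The decision inside limit(), with 'over' meaning value > maximum."""
    return enabled and over


def achieves_decision_coverage(tests) -> bool:
    """The decision must evaluate to both True and False."""
    return {decision(*t) for t in tests} == {True, False}


def achieves_mcdc(tests, conditions: int = 2) -> bool:
    """Each condition needs a test pair that differs only in that
    condition and produces a different outcome."""
    for i in range(conditions):
        shown = any(
            a[i] != b[i]
            and all(a[j] == b[j] for j in range(conditions) if j != i)
            and decision(*a) != decision(*b)
            for a, b in combinations(tests, 2)
        )
        if not shown:
            return False
    return True


# One test executes every statement of limit(), but the decision is
# never False, so the "skip" path remains untested.
statement_only = [(True, True)]
decision_only = [(True, True), (False, False)]
mcdc_set = [(True, True), (False, True), (True, False)]

print(achieves_decision_coverage(statement_only))  # False
print(achieves_decision_coverage(decision_only))   # True
print(achieves_mcdc(decision_only))                # False
print(achieves_mcdc(mcdc_set))                     # True
```

For a decision with n independent conditions, MC/DC typically needs n + 1 test cases, compared with 2^n for testing every combination.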

SOUP vs. OTS evaluation

Use of 3rd party software

For software development, it makes sense to rely on libraries, operating systems and existing source code so that development can be simplified and accelerated.

Before using the 3rd party software, a formal evaluation must be carried out to determine which software should be used. The requirements that the software development has for the 3rd party software must be defined and possible variants listed. In a deeply embedded environment, bare metal (i.e. without any operating system) can also be used as an alternative development variant.

An important aspect in the selection of the operating system is the possibility of debugging and logging, so that software development and subsequent error analysis of software or devices in the field are simple and fast.

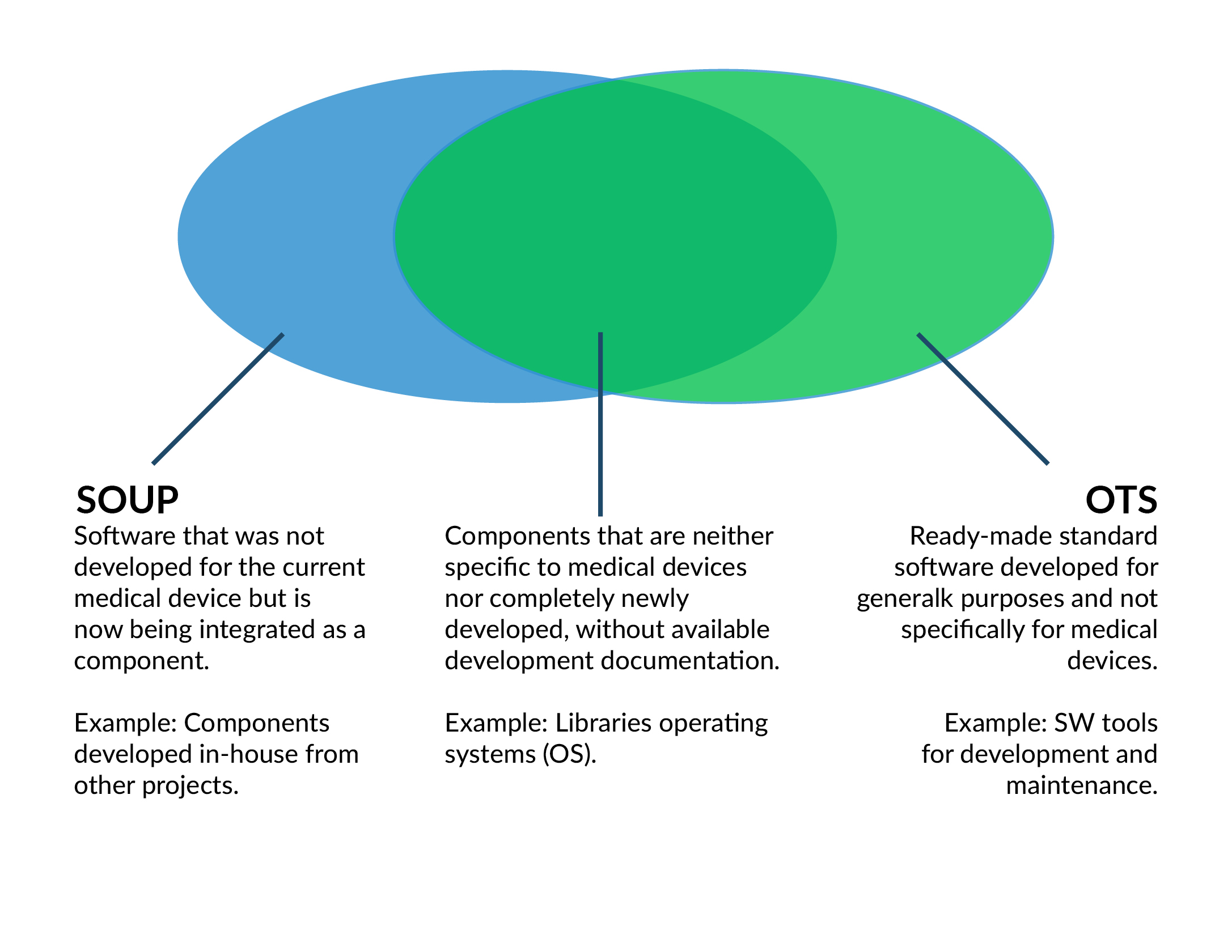

SOUP vs. OTS software

For software that was not developed for medical devices, the requirements of IEC 62304 and FDA (USA) differ.

SOUP means: Software of Unknown Provenance

OTS means: Off-the-Shelf [Software]

The two terms appear to be equivalent, but there are minor differences in the details:

Figure 9 SOUP vs. OTS software with a large overlap.

Nevertheless, the superset covers most of the software used in a medical device (also stand-alone).

The differences lie in the effort required for the evaluation - and thus the internal approval for use in a medical device.

Depending on the criticality of the software in question, the FDA requires measures which, in extreme cases, can extend to an audit of the manufacturer of the OTS software.

In practice, however, this is hardly feasible in most cases - e.g. with manufacturers such as Microsoft or Google - as these providers generally do not provide insight into their development and quality processes, nor would they allow individual audits of medical device manufacturers.

IEC 62304 requires (incomplete list) for SOUP components:

- Configuration management

- Specify & verify risk & requirements of SOUP

- Checking the system requirements (RAM, CPU,...)

The FDA guidance document "Off-The-Shelf Software Use in Medical Devices" expects the following from manufacturers (list incomplete):

- Depending on the 'Level of concern' (basic or enhanced): the enhanced level requires an audit of the OTS software developer: "[...] this may include a review of the OTS software developer's design and development methodologies [...]" [Section III.D.1]

- OTS Evaluation Report incl.

- Risk assessment

- Configuration management

- Setting up and checking the requirements for OTS

- Description and life-cycle of the OTS

Recommendation:

When selecting the SOUP / OTS, it is sometimes possible to obtain test protocols and similar documents from the software manufacturer. This makes it easier to evaluate the OTS (example: SafeRTOS instead of FreeRTOS).

Alternatively, risk control measures can be used to reduce the classification of the OTS so that an audit of the manufacturer is no longer necessary.

Conclusion:

The effort involved in an OTS evaluation is considerably higher than a SOUP evaluation. As the FDA generally requires an OTS evaluation as part of a US approval, it is advisable to consider these requirements at an early stage and integrate them into the software development process.

Clean risk management can help to reduce criticality and thus enable the use of 3rd party software.

It should also be evaluated whether the libraries or an operating system are really necessary, or whether the development can be realized on bare metal, especially in deeply embedded systems.